Substantial effort has been invested in expanding the role of robots into a multitude of new domains, from schools to grocery stores to warehouses. But as robots are increasingly expected to work in close quarters with humans or even interact with them directly, the challenges of human-robot interaction have become more pronounced. Behaviors that allow robots to engage with humans safely, efficiently, and smoothly are difficult to create, and the difficulty is compounded by the fact that how humans perceive and respond to robots is itself still an open problem.

The goal of this subteam is to create robot behaviors that make robots more effective teammates and collaborators with humans. We use an interdisciplinary approach, leveraging machine learning, cognitive science, and social psychology to make robots more predictable, legible, and safe around humans. This subteam works with a variety of collaborators to conduct foundational research and run human-subjects studies to validate these approaches.


1. Projects

1.1 Hierarchical Human-Agent Interaction

Students: Stéphane Aroca-Ouellette

Publications:

- S. Aroca-Ouellette, M. Aroca-Ouellette, U. Biswas, K. Kann, and A. Roncone, “Hierarchical Reinforcement Learning for Ad Hoc Teaming,” in Proceedings of the 2023 International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2023. [PDF] [BIB]

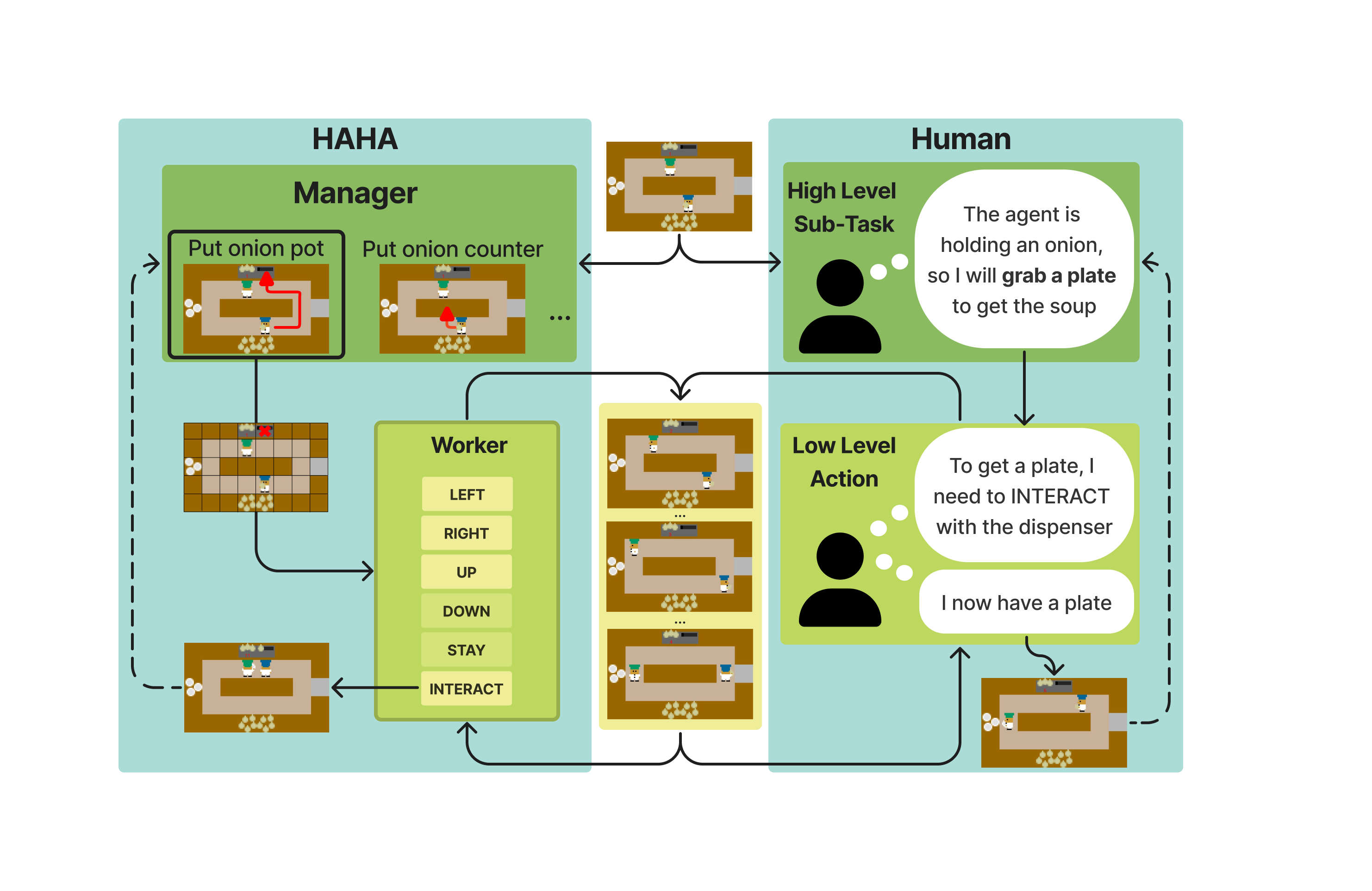

In collaborative tasks, humans excel at adapting to their partners and converging on a shared strategy that maximizes team success. Current state-of-the-art machine learning models largely lack this skill. We contend that this gap stems from the traditional focus on learning human-agent interaction from low-level primitive actions, whereas human collaboration centers on high-level strategies. To address this, we introduce HAHA (Hierarchical Ad Hoc Agents), a novel framework that uses hierarchical reinforcement learning to train an agent capable of navigating the intricacies of ad hoc teaming at a level of abstraction closer to that of human collaboration. HAHA consists of a Worker and a Manager, which focus respectively on completing sub-tasks efficiently and on high-level team strategy. We evaluate HAHA in the Overcooked environment and show that it outperforms existing baselines on both quantitative and qualitative metrics, offering improved teamwork, greater resilience to environmental shifts, and heightened agent intelligibility. We further show that HAHA's generalization ability extends to changes in the environment and that its structure allows new strategies, not encountered during training, to be induced. We posit that these advances form a crucial building block toward safer and more efficient human–AI teams.

Architecture of the Hierarchical Ad Hoc Agents (HAHA). Much as a human would, the Manager first selects which high-level task to accomplish next; the low-level Worker then takes over to carry out that task.
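The Manager/Worker split described above can be illustrated with a toy control loop. This is a minimal sketch, not HAHA's implementation: the 1-D kitchen, the station positions, and the functions `manager`, `worker`, and `run_episode` are hypothetical, the hand-written rules stand in for the policies HAHA learns with hierarchical reinforcement learning, and the human partner is not modeled.

```python
from typing import Optional

# Hypothetical 1-D kitchen: each subtask means "walk to a station, then interact".
STATIONS = {"fetch_onion": 0, "fill_pot": 4, "fetch_dish": 7, "serve": 9}


def manager(holding: Optional[str], onions_in_pot: int) -> str:
    """High-level policy: choose the next subtask (a hand-written rule standing in for a learned one)."""
    if holding == "onion":
        return "fill_pot"
    if holding == "dish":
        return "serve"
    if onions_in_pot < 3:
        return "fetch_onion"
    return "fetch_dish"


def worker(position: int, subtask: str) -> str:
    """Low-level policy: emit one primitive action that makes progress on the current subtask."""
    target = STATIONS[subtask]
    if position < target:
        return "right"
    if position > target:
        return "left"
    return "interact"


def run_episode(start: int = 2, max_steps: int = 60) -> tuple[list[str], list[str]]:
    """Alternate Manager decisions and Worker actions until one soup is served."""
    position, holding, onions_in_pot = start, None, 0
    completed: list[str] = []
    actions: list[str] = []
    for _ in range(max_steps):
        # Re-queried every step; the choice only changes once a subtask completes.
        subtask = manager(holding, onions_in_pot)
        action = worker(position, subtask)
        actions.append(action)
        if action == "right":
            position += 1
        elif action == "left":
            position -= 1
        else:  # "interact" at the station completes the subtask
            if subtask == "fetch_onion":
                holding = "onion"
            elif subtask == "fill_pot":
                holding, onions_in_pot = None, onions_in_pot + 1
            elif subtask == "fetch_dish":
                holding = "dish"
            else:  # "serve"
                holding = None
            completed.append(subtask)
            if subtask == "serve":
                break
    return completed, actions


completed, actions = run_episode()
print(completed)
print(len(actions))
```

Because the Manager emits a readable subtask label rather than a raw movement, an observer can follow the agent's intent at the level of "fetch onion" or "serve"; this is one plausible way a hierarchy supports the intelligibility described above.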

1.2 Predictability in HRI

Students: Clare Lohrmann

Publications:

- C. Lohrmann, E. Berg, B. Hayes, and A. Roncone, “Improving Robot Predictability via Trajectory Optimization Using a Virtual Reality Testbed,” in 7th International Workshop on Virtual, Augmented, and Mixed-Reality for Human-Robot Interactions (VAM-HRI), 2024. [PDF] [BIB]

- C. Lohrmann, M. Stull, A. Roncone, and B. Hayes, “Generating Pattern-Based Conventions for Predictable Planning in Human-Robot Collaboration,” in ACM Transactions on Human-Robot Interaction, 2024. [PDF] [BIB]

For humans to effectively work with robots, they must be able to predict the actions and behaviors of their robot teammates rather than merely react to them. While there are existing techniques enabling robots to adapt to human behavior, there is a demonstrated need for methods that explicitly improve humans’ ability to understand and predict robot behavior.

Our methods leverage the innate human propensity for pattern recognition and abstraction to improve team dynamics in human-robot teams and to make robots more predictable to the humans who work with them. Patterns are a cognitive tool that humans rely on constantly, and the human brain is in many ways primed to recognize and use them. In this research stream, we lean into these cognitive tendencies to improve human-robot teaming and human perceptions of robot teammates.

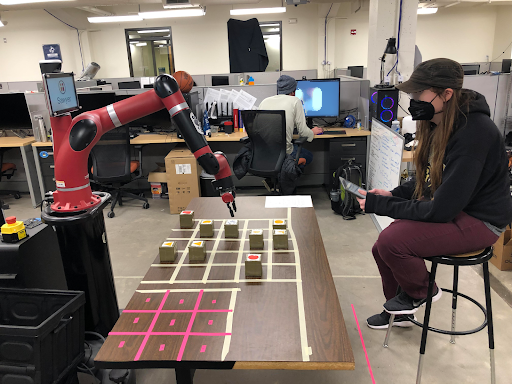

The setup for experiments conducted for our THRI journal article, where participants played a coordination game with a Sawyer robot.

In our most recent work, we introduce PACT, a method for setting conventions for a human-robot team using patterns that humans can recognize. PACT forms these patterns from human-visible features of the game setting, such as color, shape, and location, and selects a pattern-based convention that is as deterministic and as unique as possible. Determinism means that a human who knows the pattern knows what comes next; uniqueness means that the robot's behavior cannot be explained by any other pattern. Our experiment shows that emphasizing predictability through pattern-based conventions not only improves human-robot performance on a coordination task but also leads to more positive perceptions of the robot and its contributions to the team.
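One way to make the determinism/uniqueness trade-off concrete is a toy scoring scheme over candidate conventions. This is a hypothetical sketch, not PACT's actual formulation: the scene, the four candidate orderings, the definitions of `determinism` and `uniqueness`, and the choice to multiply the two scores are all assumptions made for illustration.

```python
# Hypothetical scene: objects described only by human-visible features.
OBJECTS = [
    {"id": "A", "color": "red", "shape": "cube", "x": 3},
    {"id": "B", "color": "blue", "shape": "ball", "x": 0},
    {"id": "C", "color": "red", "shape": "ball", "x": 1},
    {"id": "D", "color": "green", "shape": "cube", "x": 2},
]

# Hypothetical candidate conventions: each orders the objects by one visible feature.
CANDIDATES = {
    "left_to_right": lambda o: o["x"],
    "right_to_left": lambda o: -o["x"],
    "by_color": lambda o: ["red", "green", "blue"].index(o["color"]),
    "by_shape": lambda o: ["cube", "ball"].index(o["shape"]),
}


def sequence(key, objects):
    """Order in which a robot following the convention picks objects (ties broken by id)."""
    return sorted(objects, key=lambda o: (key(o), o["id"]))


def determinism(key, objects):
    """Fraction of picks the convention pins down; a tie among k objects leaves k-1 picks ambiguous."""
    return len({key(o) for o in objects}) / len(objects)


def consistent(key, seq):
    """True if a picking sequence never violates this convention's ordering."""
    values = [key(o) for o in seq]
    return all(a <= b for a, b in zip(values, values[1:]))


def uniqueness(name, objects, candidates):
    """1 / (number of candidate conventions that explain the robot's sequence, itself included)."""
    seq = sequence(candidates[name], objects)
    return 1 / sum(consistent(key, seq) for key in candidates.values())


def select_convention(objects, candidates):
    """Score every candidate and return the best name along with all scores."""
    scores = {
        name: determinism(key, objects) * uniqueness(name, objects, candidates)
        for name, key in candidates.items()
    }
    return max(scores, key=scores.get), scores


best, scores = select_convention(OBJECTS, CANDIDATES)
print(best, scores)
```

In this toy scene `left_to_right` wins: it never leaves a tie, and no other candidate explains its picking order. `right_to_left` is equally deterministic, but on this layout its order is also consistent with `by_shape`, so a human watching the robot could not tell which pattern it is following.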

1.3 Interactive Task and Role Assignment for Human-Robot Collaboration

Postdoc: Jake Brawer

Students: Kaleb Bishop

Publications:

- J. Brawer, K. Bishop, B. Hayes, and A. Roncone, “Towards A Natural Language Interface for Flexible Multi-Agent Task Assignment,” in 2023 AAAI Fall Symposium on Artificial Intelligence for Human-Robot Interaction (AI-HRI), 2023. [PDF] [BIB]

Task assignment and scheduling (TAS) algorithms are powerful tools for coordinating large teams of robots, AIs, or humans with optimality and safety guarantees. However, standard optimization-based techniques for TAS require deep technical knowledge to design and are far too rigid to handle the complexities of many real-world, high-stakes tasks. Large language models (LLMs) like ChatGPT, in contrast, are extraordinarily flexible and capable of wide-ranging feats of generalized reasoning, though they lack these guarantees. Our ongoing work seeks to narrow the gap between human ingenuity and algorithmic efficiency by enabling users to shape, update, and modify TAS systems entirely through interactive, LLM-mediated dialogue. We are confident that this approach will not only enhance the efficiency and flexibility of task management but also democratize the use of advanced TAS algorithms.
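To illustrate the kind of guarantee an optimization-based TAS backend provides, the sketch below exhaustively searches for the minimum-cost one-task-per-agent assignment and lets constraints be added afterwards. It is a hypothetical toy, not our system: the agents, tasks, costs, and the `forbidden` constraint format are invented, and the LLM layer is not shown. The assumed division of labor is that dialogue would only be translated into structured constraints like these, while the solver, not the language model, stays responsible for optimality.

```python
from itertools import permutations

# Hypothetical cost (e.g., minutes) for each agent to perform each task.
COST = {
    "robot_1": {"fetch": 4, "assemble": 9, "inspect": 3},
    "robot_2": {"fetch": 2, "assemble": 6, "inspect": 5},
    "human": {"fetch": 5, "assemble": 4, "inspect": 2},
}


def assign(cost, forbidden=frozenset()):
    """Return the minimum-cost assignment of one task per agent, or (None, inf) if infeasible.

    Assumes there are as many tasks as agents. `forbidden` holds (agent, task)
    pairs: a hypothetical structured constraint that a dialogue front end could
    produce from a request such as "robot_2 shouldn't fetch anything".
    """
    agents = sorted(cost)
    tasks = sorted(next(iter(cost.values())))
    best, best_cost = None, float("inf")
    for perm in permutations(tasks):  # exhaustive search, so the result is provably optimal
        pairs = list(zip(agents, perm))
        if any(pair in forbidden for pair in pairs):
            continue
        total = sum(cost[a][t] for a, t in pairs)
        if total < best_cost:
            best, best_cost = dict(pairs), total
    return best, best_cost


print(assign(COST))
print(assign(COST, forbidden=frozenset({("robot_2", "fetch")})))
```

Exhaustive search is only practical for a handful of agents; realistic TAS problems are usually handed to dedicated integer- or constraint-programming solvers, but the interaction pattern (state a constraint, re-solve, compare) would be the same.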

1.4 Student-Robot Teaming and Robots in the Classroom

Students: Kaleb Bishop

Recent work in cognitive learning theory and social psychology presents new opportunities to improve and rethink the application of robots in the classroom. Why are robots such powerful tutoring agents, and how can we use their unique social roles to develop student skills in reading, STEM, and beyond, and to address educational inequality across lines of race, gender, and ability? Current projects, conducted in conjunction with the NSF AI Institute for Student-AI Teaming (iSAT) and the Engineering Education and AI-Augmented Learning IRT, include studying HRI design considerations for students of color and developing a robot tutoring system that targets grounded reading skills.

1.5 Robotic Intent Signaling System

Students: Mitchell Scott, Shreyas Kadekodi, Caleb Escobedo, Clare Lohrmann

Creating information channels between robots and humans is complicated and fraught with potential pitfalls; the goal is a simple system that carries information from one party to the other consistently and clearly. Much work has gone into creating robot signals and modes of communication, but most of it seeks to answer the question “Does this system communicate what it was intended to?”, be it the robot’s path, intent, or status. This research is typically conducted in controlled environments, so the applicability of these systems to environments constrained by space or time is unknown. We are currently examining the effectiveness of a projection-based trajectory system in a cluttered, fast-paced environment. Through this work, we hope to determine when this method of communication is most effective, and whether and when the environment becomes too crowded or too fast-moving for it to remain effective.

2. Publications

2025 Computers in Human Behavior: Artificial Humans

2025 International Joint Conference on Artificial Intelligence [IJCAI]

Montreal, Canada, August 16-22

2025 International Conference on Robot and Human Interactive Communication [RO-MAN]

Eindhoven, Netherlands, August 25-29

2025 RSS Workshop on Human-in-the-Loop Robot Learning: Teaching, Correcting, and Adapting

Los Angeles, California, USA, June 25

2025 RSS Workshop on Scalable and Resilient Multi-Robot Systems: Decision-Making, Coordination, and Learning

Los Angeles, California, USA, June 25

2024 IEEE International Conference on Robot and Human Interactive Communication [RO-MAN]

Pasadena, CA, USA, August 26-30

2024 ACM Transactions on Human-Robot Interaction [THRI]

2024 ACM/IEEE International Conference on Human-Robot Interaction [HRI]

Boulder, CO, USA, March 11-14

2024 7th International Workshop on Virtual, Augmented, and Mixed-Reality at HRI '24 [VAM-HRI]

Boulder, CO, USA, March 11-14

2024 HRI'24 Workshop on Causal Learning for Human-Robot Interaction

Boulder, CO, USA, March 11-14

2024 7th International Workshop on Virtual, Augmented, and Mixed-Reality at HRI '24 [VAM-HRI]

Boulder, CO, USA, March 11-14

2023 AAAI Fall Symposium on Artificial Intelligence for Human-Robot Interaction [AI-HRI]

Arlington, VA, USA, Oct 25-27

2023 International Conference on Autonomous Agents and Multiagent Systems [AAMAS]

London, UK, May 28-June 2

2023 ACM/IEEE International Conference on Human-Robot Interaction [HRI]

Stockholm, Sweden, March 13-16

2023 6th International Workshop on Virtual, Augmented, and Mixed-Reality at HRI '23 [VAM-HRI]

Stockholm, Sweden, March 13

2023 Workshop on Language-Based AI Agent Interaction with Children [AIAIC]

Los Angeles, USA, February 21

2022 IEEE International Conference on Robot & Human Interactive Communication [RO-MAN]

Naples, Italy, August 29

2022 Frontiers in Robotics and AI

2021 ACM International Conference on Human-Robot Interaction [HRI], Workshop on Robots for Learning

Boulder, CO, USA, March 12

2019 2nd ICRA International Workshop on Computational Models of Affordance in Robotics

Montreal, Canada, May 20-24

An Affordance-based Action Planner for On-line and Concurrent Human-Robot Collaborative Assembly

2018 IEEE/RSJ International Conference on Intelligent Robots and Systems [IROS]

Madrid, Spain, October 1-5

2018 IEEE/RSJ International Conference on Intelligent Robots and Systems [IROS]

Madrid, Spain, October 1-5

2018 IEEE/RSJ International Conference on Intelligent Robots and Systems [IROS]

Madrid, Spain, October 1-5

2018 International Conference on Autonomous Agents and Multiagent Systems [AAMAS]

Stockholm, Sweden, July 11-13

2017 IEEE International Conference on Robotics and Automation [ICRA]

Singapore, Singapore, May 29-June 3